WebSocket connections started failing for Safari/iOS 26 users. The browser was sending HTTP CONNECT requests instead of proper WebSocket upgrade requests, breaking connections through reverse proxies.

Create a chatbot that summarizes Slack conversations and answers questions using DigitalOcean's Gradient Platform.

Easily create valuable AI-powered agents that can retrieve and process real-time information using DigitalOcean's Gradient Platform.

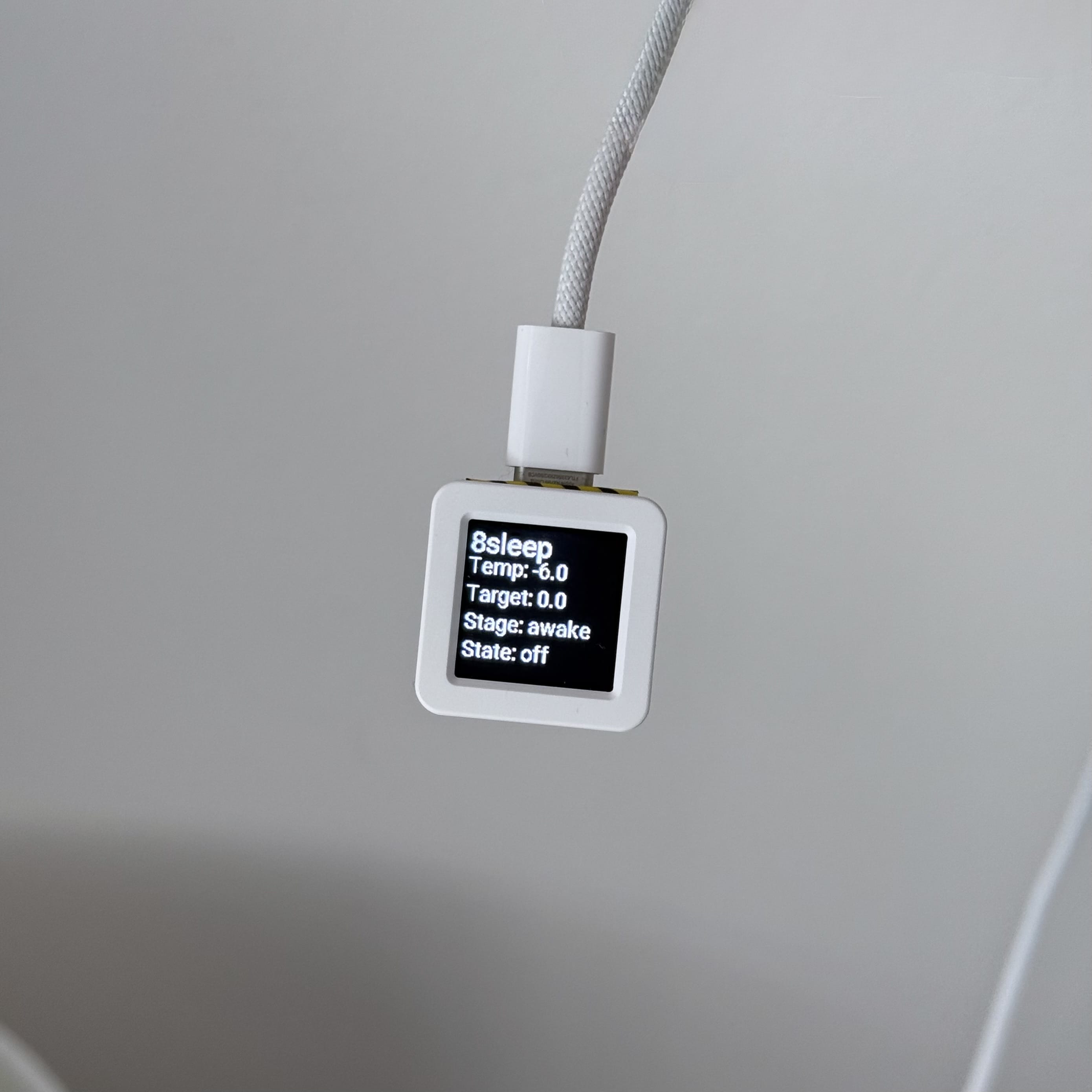

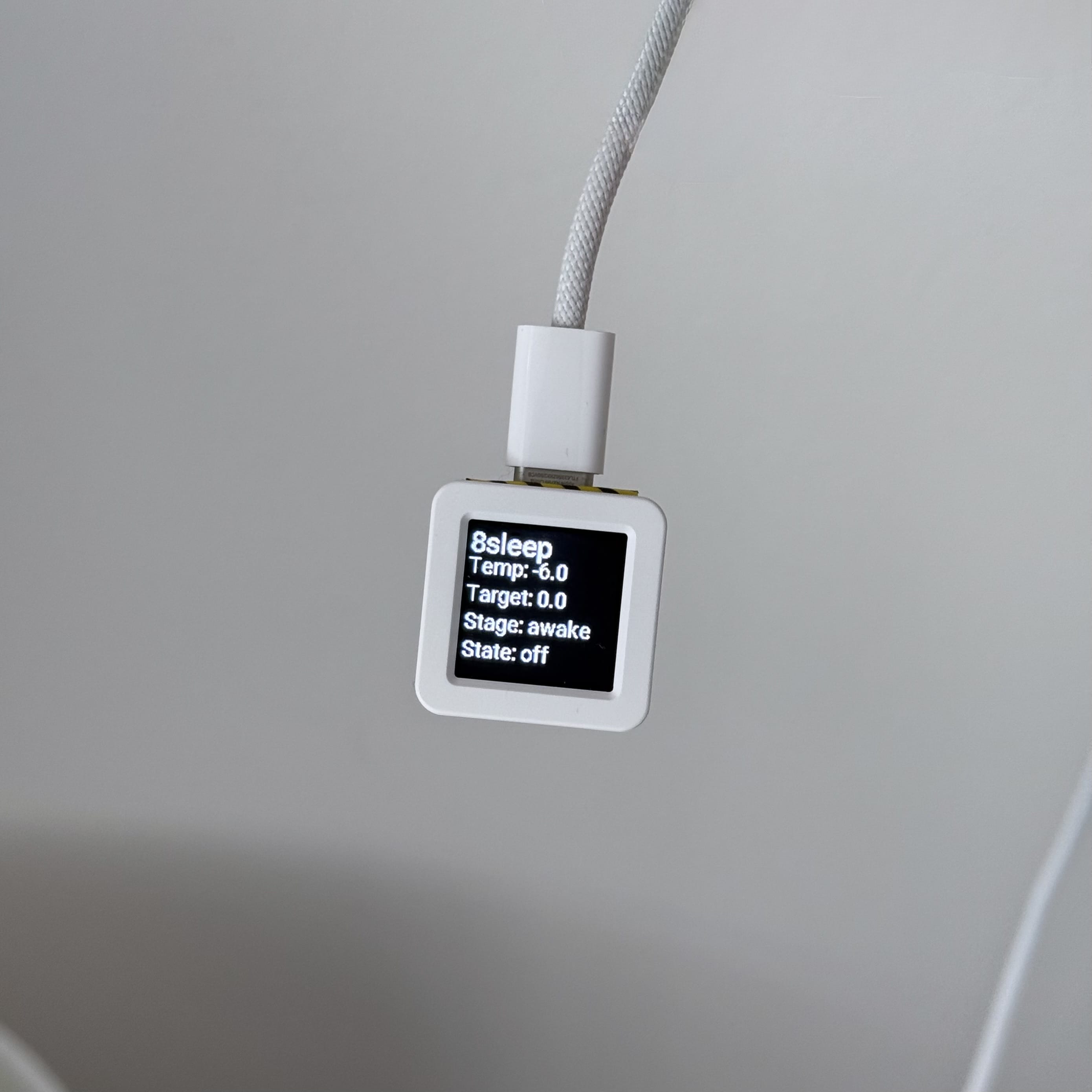

A hardware interface for Eight Sleep temperature control using an ATOMS3 Dev Kit, displaying current bed temperature, sleep stage, and bed state on a small screen with button control for temperature adjustments through Home Assistant.

Effortlessly collect and forward DigitalOcean Kubernetes (DOKS) logs to DigitalOcean Managed OpenSearch for enhanced data analysis and monitoring.

A technical guide for configuring a Ubiquiti Dream Machine to work with Trooli's FTTP service in the UK, including steps to obtain PPPoE credentials and set up IPv6 connectivity by bypassing the provided Technicolor DGA4134 router.

Home Automation · Language Models

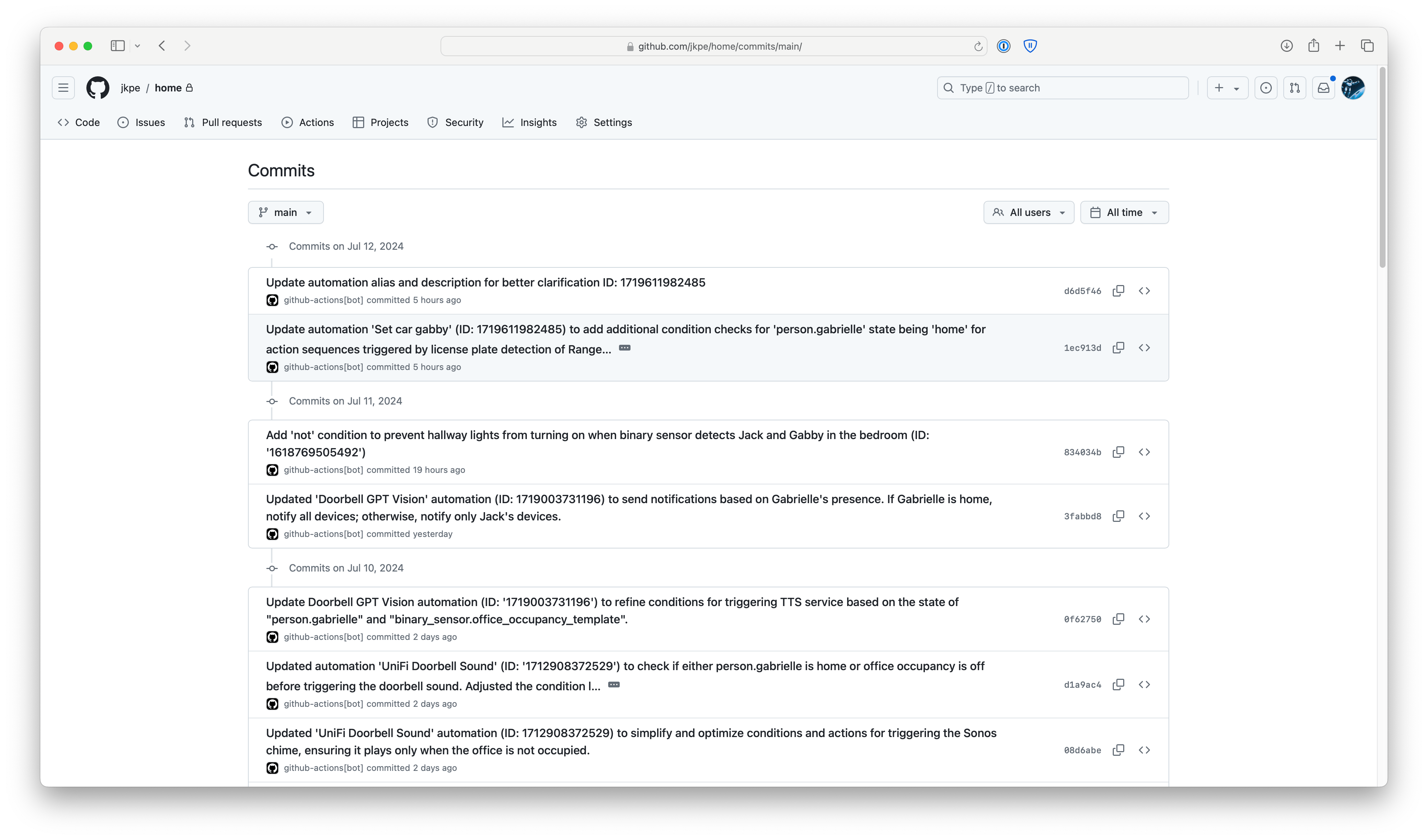

Jul 12, 2024

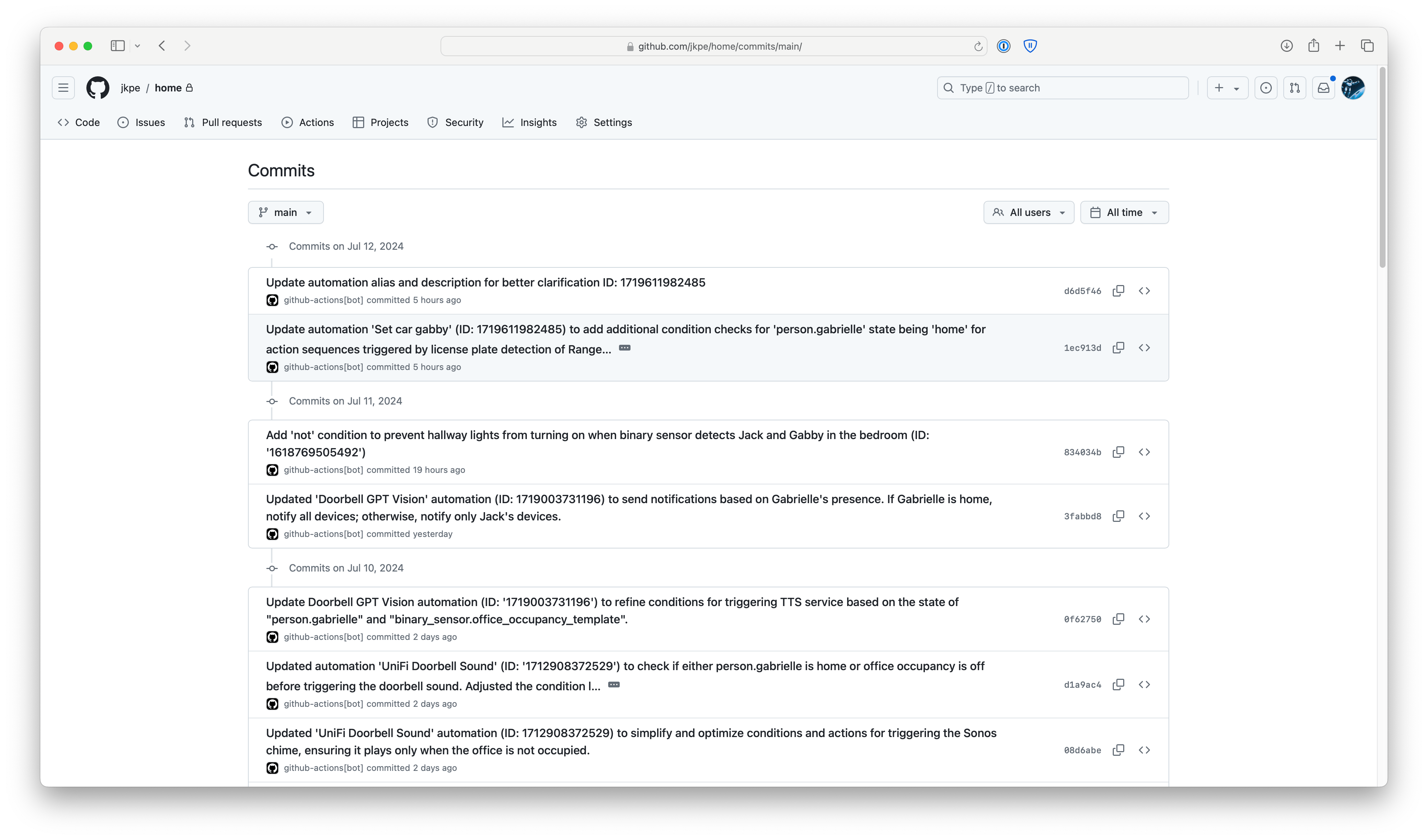

A technical walkthrough of using GPT-4o to automatically generate clear, descriptive titles and descriptions for Home Assistant automations from their YAML configurations.

Home Automation · Language Models

Jul 7, 2024

A technical walkthrough of using GitHub Actions to automatically track Home Assistant automation changes. The workflow uses AI to generate commit messages, creates versioned releases, and maintains a chronological history of smart home configuration updates.

Home Automation · Language Models

Jul 6, 2024

A GitHub Actions setup that automatically updates Home Assistant Core and OS versions when new releases are available. Uses Renovate for version detection, Tailscale for secure remote access, and the Home Assistant CLI for executing updates.

I have owned the Sleep Pod 3 for nearly 30 nights now; here are my initial thoughts.

Home Automation · Home Lab

Dec 7, 2023

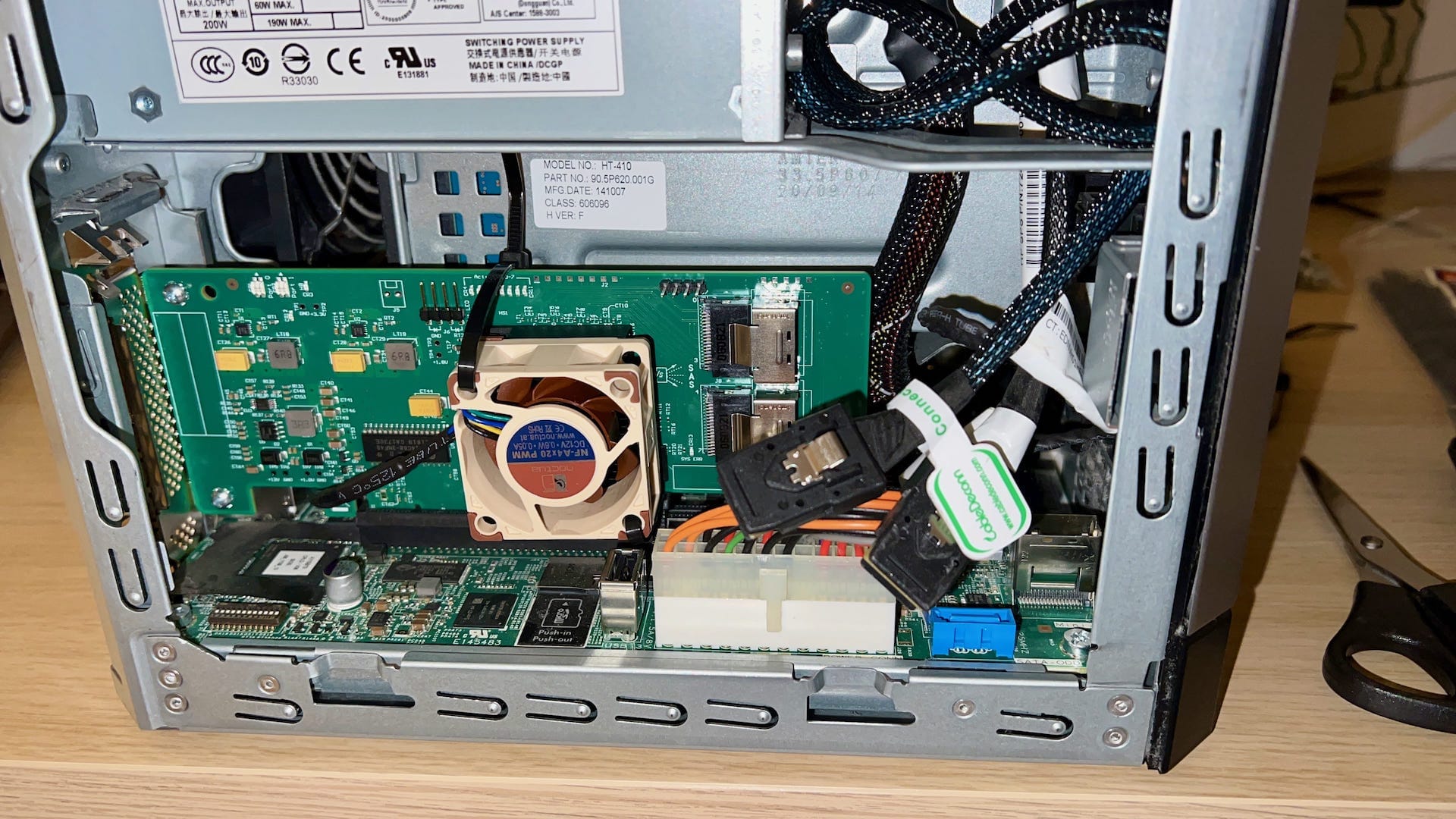

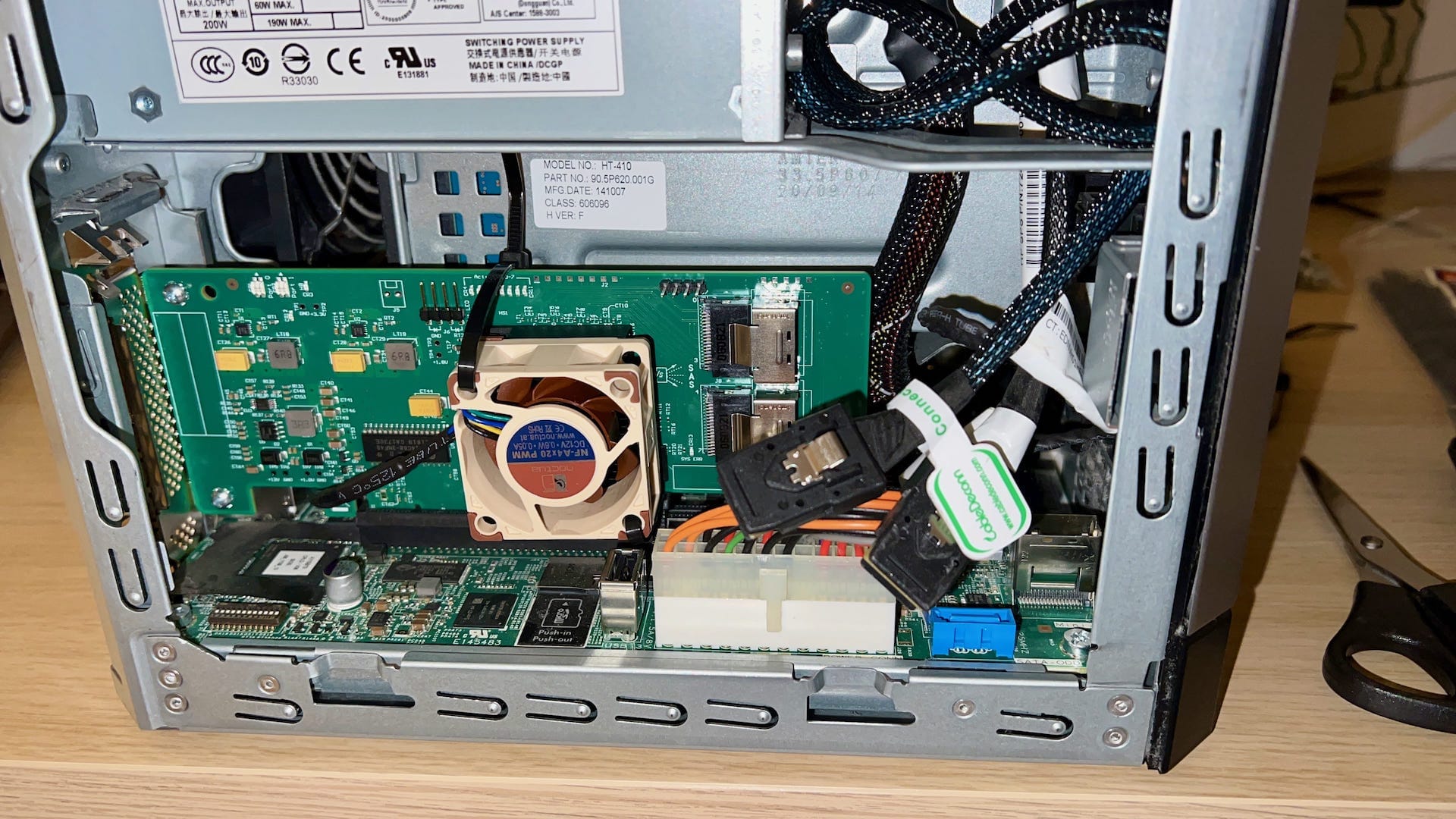

My review of the Home Assistant Yellow 5 after using it for 5 months.

How I used OpenAI GPT-4 and OpenAI Assistants to build a bot that answers questions about DigitalOcean's documentation.

In this post I explain how to introduce Renovate into your docker-compose setup. This lets you pin Docker image versions in your docker-compose file while still declaring them as dependencies, so Renovate raises a pull request whenever a new image is published.

In this guide we will deploy a Rook / Ceph cluster and give some working examples of consuming RWO Block and RWX Filesystem storage.

A guide to repurposing a Dell Wyse 3040 thin client as a WireGuard VPN router with AdGuard for DNS and DHCP services, achieving speeds up to 850Mbps.

Netmaker is a platform for creating fast and secure virtual networks with WireGuard.

I recently switched from an HP T730 Thin Client running OPNsense as my home router to something more 'off the shelf': a Ubiquiti Dream Machine Special Edition. My OPNsense router ran absolutely fine, and I loved the reliability and performance it gave me.

Deploying Ghost on DigitalOcean App Platform.

Home Automation · Home Lab

Oct 8, 2022

I use cloudflared to provide a secure tunnel to my home resources. I struggled to find any instructions for running cloudflared on OPNsense, so here is a quick how-to.

Unraid is my favourite NAS OS to run at home. It’s super easy to set up and administer.

I recently installed an LSI 9211-8i HBA into my HP MicroServer Gen8 as a replacement for a PCIe M.2 SSD adapter that kept putting my filesystem into read-only mode.

There are so many ways to build and host a blog for free nowadays. In 2020 I am using Hugo, GitHub Actions and Cloudflare Workers Sites.

Today I learnt something new. The ‘ring ring’ you hear when you make a phone call isn’t generated by your phone, your network or even the country you’re in. That noise is generated by the receiving end’s phone system, which means it can be customised.

For the past couple of months I have been running a permanent VPN on my iPhone.

Home Automation · Home Lab

Jul 15, 2019

Home Automation · Home Lab

May 15, 2019

Unfortunately this means they now proxy all your DNS traffic using a transparent DNS proxy, regardless of what DNS resolver you have set on your client.

In this article I explain how to enable IPv6 connectivity from any desktop in less than 5 minutes.

I recently migrated my blog from WordPress to Silvrback to Ghost. I was very happy with Silvrback; it’s an excellent writing platform. In the end I got fed up with not being able to change how my site looked (no theming), so I switched to Ghost.

Organizing my photo collection has been incredibly liberating these past few days. I set out to de-duplicate my photos, sort them into a folder structure, shrink their file size without affecting image quality, and finally automate the whole process for the future.

Virgin Media, why does www.google.com resolve to host-62-253-8-99.not-set-yet.virginmedia.net? What a funny name for a PTR record, but seriously, why are you manipulating my traffic?

I use Everpix to back up and organise every photo I’ve ever taken – all 27,159 of them.

Everything requires a password. A long time ago it bugged me that I couldn’t think of something secure, so I visited a web page that randomly generated passwords and have used the same 8-character password ever since. It contained 1 number and 7 letters, one of which was uppercase.

If there’s one thing I seem to be obsessed with lately, it’s security. I’ve never had any of my accounts hacked, but that doesn’t stop me. It happens all the time, though, and working in IT I should know how to prevent it.